Burning Questions: Getting Beyond the Chat on Generative AI

In brief:

● The proliferation of large language models has sparked widespread adoption and rapid innovation

● These models only know what they have been taught or what has been scraped from the internet, which brings inherent risks ranging from misinformation to bias

● LLMs still need to be assessed, verified and checked against trusted sources before they can be reliable and deliver real value

The rapid adoption and evolution of generative AI over the last year have fundamentally changed how humans interact with machines. But what can these models do – and not do – and should we be afraid?

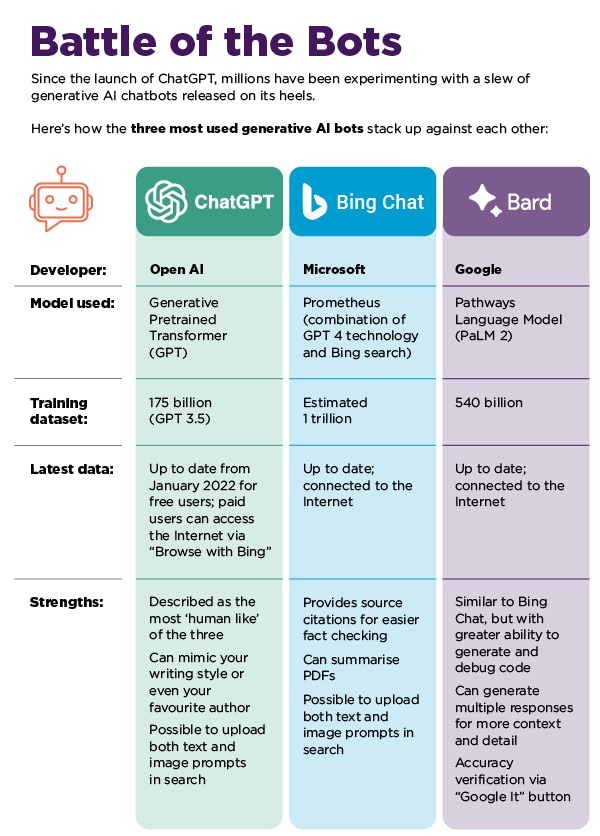

Generative AI has evolved at a dizzying pace over the last year, with an onslaught of large language models (LLMs) like ChatGPT and Bard, which are able to respond with human-like dialogue and content.

The supersized “autocomplete” skills of generative AI, which can churn out everything from essays and poetry to artwork, have been met with both fascination and some measure of concern.

We put your Burning Questions about the good and bad of generative AI – and what the future might hold for it – to Temasek’s Head of AI Strategy and Solutions, Dr Michael Zeller, and Aicadium’s Senior Vice President of Data Science, Dr Dimitry Fisher, as well as to the LLMs themselves.

“It is the combination of human creativity and the ability of AI to aggregate enormous amounts of data that will be fascinating to watch moving forward.”

Dimitry Fisher, Aicadium’s Senior Vice President of Data Science

Q: Why is generative AI all over my newsfeed?

Michael: It was the launch of ChatGPT in November last year and its frenzied adoption that thrust generative AI into the spotlight. In just five days, ChatGPT reached 1 million users – by comparison, it took Netflix 3.5 years to reach the same number. The technology didn’t come out of nowhere. It has its roots in the 1960s, and the 2010s saw broader adoption of “deep learning”. The proliferation of data and improved algorithms now allow it to have human-like conversations and create life-like art. Millions are experimenting with this and other platforms, which is why everyone is talking about it.

Q: This seems like a good thing. Why are so many concerned?

ChatGPT: Despite my capabilities, I have limitations. I can produce misinformation because I don't genuinely understand the content. I may also amplify societal biases present in my training data. My ability to create realistic yet misleading content threatens information authenticity, and I lack an ethical framework for guidance.

Michael: ChatGPT is actually spot on, and that’s why blind trust is a bad idea. LLMs seem confident but they do get information wrong. Generative AI only knows what it has been told or what has been scraped from the internet – including errors and biases. It learns the patterns in those texts, and then determines the probability of what the next word in a response should be. If it doesn’t have an answer, it will simply stitch one together.

Dimitry: Exactly. Even if humans provide LLMs with examples of how to “reason”, it is still humans teaching them what to say or not to say, which is what we call Reinforcement Learning from Human Feedback (RLHF). It will be a massive, multi-year effort to get LLMs to generate and represent ideas, or think for themselves.
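To make Michael's point about next-word probabilities concrete, here is a minimal sketch in Python. It is a toy "bigram" counter, not how ChatGPT or Bard actually work: real LLMs use large neural networks over sub-word tokens. The corpus and the function names `train_bigram` and `next_word_probabilities` are made up for illustration. The idea it shows is the same, though: the model only "knows" the patterns in the text it was given.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Convert the follow-counts for `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the toy model has never seen this word
    return {w: n / total for w, n in followers.items()}


# Hypothetical training text, chosen only for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
probs = next_word_probabilities(model, "the")
print(probs)  # "cat" follows "the" in 2 of 4 cases, so it gets 0.5
```

Any error or bias in the training text flows straight into these probabilities, which is the risk described above.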

Q: Is the panic around generative AI displacing jobs justified?

Michael: Even if these models can’t think, the adoption and pace of evolution are bound to shake up some professions. That said, advancements don’t just eliminate some jobs, they create whole new industries, businesses, skill sets and careers. Ideally, we look for opportunities to “co-pilot” and augment human skills. We believe that our data systems should be human-centred, and that our AI systems should be designed as tools to help humans.

Bard: AI is already being used to automate tasks, make decisions, and provide insights in a wide range of industries. By learning to use AI, humans can… become more productive and efficient.

Q: How much do we really stand to gain from generative AI?

Michael: The opportunities are immense, including in areas such as content creation, software development, marketing and sales, and customer service. At Temasek, for instance, we’ve rolled out an internal enterprise version of ChatGPT firm-wide, with rigorous cyber security measures and data access rights in place. Our teams are using it to streamline internal workflows, including for investment research, and as a productivity tool. Another area where generative AI can significantly improve productivity and have a major impact is software development. GitHub’s AI coding assistant, GitHub Copilot, for instance, can more than halve the time software engineers take to complete a coding task. According to Bloomberg Intelligence, the generative AI market is set to reach US$1.3 trillion by 2032 from just US$40 billion in 2022, with a compound annual growth rate of 42% over the next decade. So there is a lot to gain here.

Dimitry: But challenges remain because generative AI is not an easy tool to integrate into corporate platforms and workflows. It’s not just about mitigating the hallucinations or confabulations. Making a model that solves real-world problems requires a much greater level of effort and expertise than deploying an open-source LLM or engineering the prompts. Not many companies have the expertise to do this well, or affordably.
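As a quick sanity check on the Bloomberg Intelligence figures Michael cites, the compound annual growth rate can be worked out from the start and end values. The sketch below uses the standard CAGR formula. The function name `cagr` is our own, and we assume a ten-year span from 2022 to 2032.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# US$40 billion in 2022 growing to US$1.3 trillion by 2032 (figures from the article).
rate = cagr(40e9, 1.3e12, 10)
print(f"{rate:.1%}")  # roughly 41.6%, which rounds to the 42% quoted
```

The result is consistent with the quoted 42% a year.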

Q: Where must generative AI go from here?

Bard: Generative AI must evolve to be more accurate, creative, fair, transparent, scalable, efficient, and secure. It should generate factually correct and relevant outputs, avoid bias and discrimination, and explain its conclusions. This will require developing models trained on diverse datasets and resistant to attacks.

Michael: Generative AI also needs to evolve so it is compliant with data protection laws, intellectual property regulations, ethical standards, and any industry-specific guidelines. For it to be truly valuable, we need to be able to verify its performance. The good news is that the tools and organisations that can do that are beginning to emerge, for instance, Singapore’s AI Verify Foundation.

Q: Could these technologies one day supersede humans?

ChatGPT: Humans have general intelligence, emotions, and consciousness. I don't. While I can generate text based on patterns, I lack real-time learning, genuine creativity, and contextual understanding. I don't have beliefs or morals. Essentially, I process information quickly but lack the depth and nuance of human intelligence.

Dimitry: The world is built on the backs of creatives, doers, inventors, and dreamers. These generative models imitate the works that have been brought forth from the minds of daring creatives brave enough to chase the visions in their mind’s eye, or they mimic other AI-generated material – but they are not here to replace us. It is the combination of human creativity and the ability of AI to aggregate enormous amounts of data that will be fascinating to watch moving forward.